If you create e-newsletters regularly, you might be wondering how you can increase the number of people reading them. In this post I’m going to show you a simple way to do this: split testing.

What is split testing?

Split testing involves sending different versions of your e-newsletters to some of the people on your mailing list, monitoring the performance of each, and sending the ‘best’ performing one to the remainder of your list.

It generally involves four steps:

- You create two or more versions of your e-newsletter.

- You send these different versions to a small percentage of email addresses on your mailing list.

- You compare how each version of your e-newsletter performs in terms of either opens or click-throughs.

- You roll out the best-performing version to the remaining email addresses on your list (depending on the tool you use, this can be done via automation).
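
If you're curious what your email tool is doing behind the scenes, the four steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool implements it: `split_test` and the `measure` callback are hypothetical names, and `measure` stands in for actually sending a version to a group and reporting its open or click-through rate. Shuffling the list before splitting it matters, because random assignment is what makes the test groups comparable.

```python
import random


def split_test(addresses, variants, sample_fraction, measure):
    """Hypothetical sketch of a split test.

    addresses: the full mailing list
    variants: names of the e-newsletter versions, e.g. ["A", "B"]
    sample_fraction: share of the list used for the test, e.g. 0.2
    measure: caller-supplied function(variant, group) -> rate (assumed)
    Returns (winner, remainder, results).
    """
    shuffled = addresses[:]  # copy so the caller's list is left untouched
    random.shuffle(shuffled)  # random assignment keeps the groups comparable
    per_variant = int(len(shuffled) * sample_fraction / len(variants))

    results = {}
    for i, variant in enumerate(variants):
        # Each version goes to its own, non-overlapping test group
        group = shuffled[i * per_variant:(i + 1) * per_variant]
        results[variant] = measure(variant, group)

    # Everyone not in a test group receives the winning version later
    remainder = shuffled[len(variants) * per_variant:]
    winner = max(results, key=results.get)
    return winner, remainder, results


# Example with a fake measure function that returns fixed rates
emails = [f"user{i}@example.com" for i in range(1000)]
fake_rates = {"A": 0.21, "B": 0.25}
winner, remainder, results = split_test(
    emails, ["A", "B"], 0.2, lambda variant, group: fake_rates[variant]
)
print(winner, len(remainder))  # B 800
```

Here 20% of a 1,000-address list is split evenly between the two versions, and the remaining 800 addresses are held back to receive the winner.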

What can I test?

There are a variety of things you can test, including:

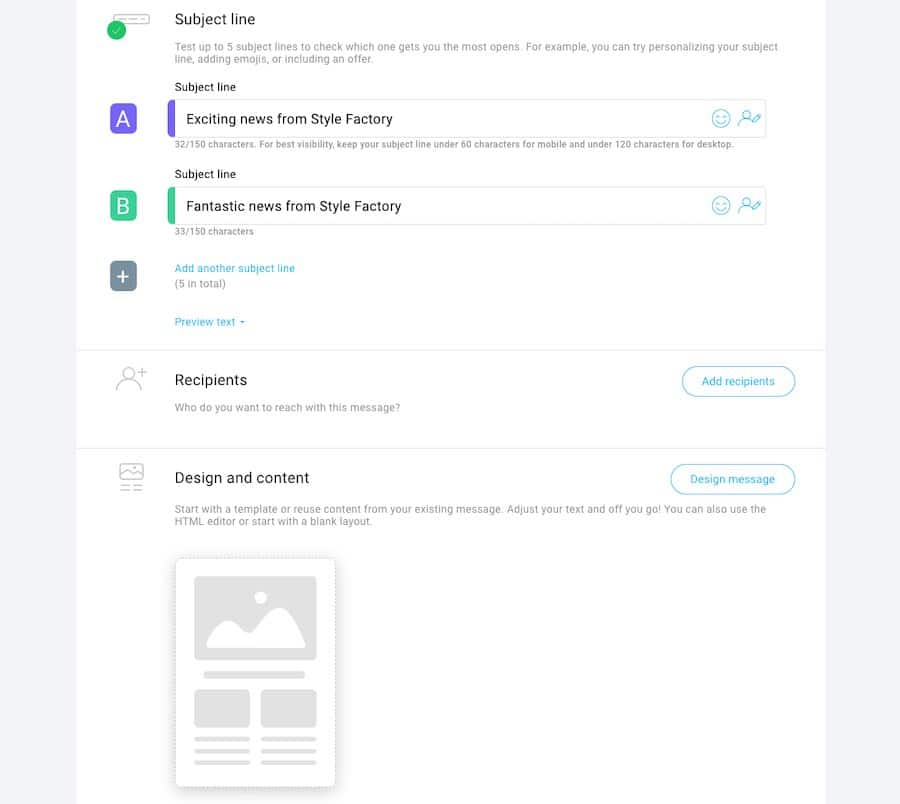

- Subject header — the title of the email that recipients see in their inbox. (Does including the recipient’s name in it help? Is a longer or shorter subject header better?)

- Sender — the person who the email is coming from (for example, open rates may vary depending on whether you send your email using a company name or an individual’s).

- Content — different text or images in the body of your email may elicit different responses, and consequently influence the number of click-throughs.

- Time of day / week — you can test different send times to see which ones generate the most opens and click-throughs.

With all the above variables, you will need to decide whether to pick a winning e-newsletter based on:

- open rate or

- click-through rate.

Open rates are generally used to determine the winner of subject header, sender and time-based tests, because those variables mainly affect whether people open the email in the first place.

Click-through rates tend to be used as a measure of success when establishing what sort of content to use in an e-newsletter.
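
To see why the choice of metric matters, here's a small sketch that calculates both rates for two versions and picks a winner by either one. The figures are made up, `VariantStats` and `pick_winner` are hypothetical names, and it assumes both rates are measured against delivered emails; some tools instead report a click-to-open rate (clicks divided by opens), so check how yours defines them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VariantStats:
    """Hypothetical per-version figures, as exported from an email tool."""
    delivered: int      # emails that were not bounced
    unique_opens: int   # recipients who opened at least once
    unique_clicks: int  # recipients who clicked at least one link

    @property
    def open_rate(self) -> float:
        return self.unique_opens / self.delivered

    @property
    def click_through_rate(self) -> float:
        # Assumed definition: clicks over delivered emails, not over opens
        return self.unique_clicks / self.delivered


def pick_winner(stats: Dict[str, VariantStats], metric: str) -> str:
    """Return the version with the highest 'open_rate' or 'click_through_rate'."""
    return max(stats, key=lambda name: getattr(stats[name], metric))


stats = {
    "A": VariantStats(delivered=1000, unique_opens=220, unique_clicks=30),
    "B": VariantStats(delivered=1000, unique_opens=180, unique_clicks=45),
}
print(pick_winner(stats, "open_rate"))           # A
print(pick_winner(stats, "click_through_rate"))  # B
```

In this made-up example, version A wins on opens but version B wins on clicks, so deciding on your success metric before the test starts stops you from simply picking whichever number looks best afterwards.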

Multivariate testing versus A/B testing

Strictly speaking, there are two types of split testing: ‘A/B testing’ and ‘multivariate testing’.

A/B testing involves just two versions of an e-newsletter, while multivariate testing involves several versions, typically created by varying more than one element (such as the subject header and the content) at the same time.

More sophisticated split testing

If you want to be really clever about things, you could run sequential tests: for example, you could carry out a subject header test, pick a winner, and then run a content-based test using three emails that share that subject header but contain different copy.

Alternatively, you could use ‘goals’ as part of your split testing, to see which of your e-newsletters are best at generating conversions for you.

For example, you could test two versions of your newsletter against each other to see which one generates more sales of your products.

Many email marketing solutions let you add a snippet of tracking code to the post-sale pages on your website (an order confirmation page, for example) so that these conversions are recorded; alternatively, you can use Google Analytics events to do this.
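
If you go down the Google Analytics route, one common approach (not the only one) is to tag the links in each version with UTM campaign parameters, using `utm_content` to record which version a visitor came from. Conversions Analytics records from those visits should then be attributable to a specific version. The sketch below is illustrative only: `tag_link` is a hypothetical helper, the `utm_source` and `utm_medium` values are example naming conventions, and some email tools may be able to add these tags for you.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_link(url: str, campaign: str, variant: str) -> str:
    """Add UTM parameters identifying the campaign and e-newsletter version.

    Hypothetical helper; assumes the URL has no repeated query parameters.
    """
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))  # keep any existing parameters
    query.update({
        "utm_source": "newsletter",  # example naming convention
        "utm_medium": "email",
        "utm_campaign": campaign,
        "utm_content": variant,      # distinguishes version A from version B
    })
    return urlunsplit(parts._replace(query=urlencode(query)))


print(tag_link("https://example.com/shop?ref=1", "spring_sale", "B"))
```

Each version of the newsletter would use links tagged with its own `variant` value, so sales reports can be split by version.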

The more complex your tests, however, the more time-consuming it all becomes – you may need to start segmenting lists, spend a lot of time on copywriting and so on.

Accordingly, it’s best to start with the most useful or informative goals rather than trying to get too clever about things, too quickly. You’ll need to weigh up the value of each test you’re doing against the time it will take to carry it out.

But how do I actually carry out a split test?

Most popular email marketing solutions – like GetResponse, Campaign Monitor, AWeber and Mailchimp – come with automatic split testing functionality built in.

This lets you create different versions of your e-newsletter, choose sample sizes and specify whether success should be measured by open rate or click-through rate. The tool then handles the rest of the test itself, automatically sending the best-performing e-newsletter to the remaining addresses on your list.

Split testing and statistical significance

A key thing to remember about split tests is that the results have to be statistically significant; otherwise, you can’t be confident that the difference between versions reflects a real effect rather than random chance.

This means:

- using a sufficiently large mailing list

- testing with sample sizes large enough to detect a meaningful difference between versions

The maths of split testing is surprisingly complicated, and it is quite easy to run split tests that produce ‘winners’ whose lead is not actually statistically significant.
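
To make this concrete, here's a sketch of one standard way to check whether the difference between two open (or click-through) rates is likely to be real: a two-proportion z-test. It's a textbook normal approximation rather than what any specific email tool necessarily uses, it assumes recipients were assigned to versions at random, and `two_proportion_z_test` is a hypothetical helper name.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple:
    """Two-sided z-test for a difference between two rates.

    successes_* are opens (or clicks); n_* are emails delivered to each group.
    Returns (z, p_value).
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Under the null hypothesis both versions share one underlying rate
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Version B "wins" 23% to 20%, but is that difference significant?
print(two_proportion_z_test(200, 1000, 230, 1000))      # p is roughly 0.10
print(two_proportion_z_test(2000, 10000, 2300, 10000))  # p is far below 0.05
```

With 1,000 recipients per version, a 20% versus 23% open rate gives a p-value of roughly 0.10, so by the common 0.05 threshold version B's ‘win’ could easily be down to chance; the same rates across 10,000 recipients per version are highly significant.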

It’s relatively straightforward to work out correct sample sizes for simple A/B tests involving just two variants of a newsletter, and Campaign Monitor provides a good guide to working out A/B sample sizes.
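
For a sense of the numbers involved, here is a sketch of the common normal-approximation formula for the sample size each version needs in a two-variant test. It's illustrative rather than Campaign Monitor's own method: `sample_size_per_variant` is a hypothetical name, and the 5% significance level and 80% power defaults are conventional choices, not requirements.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Recipients needed per version to detect baseline -> expected (two-sided)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # chance of detecting a real lift
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Detecting a lift from a 20% to a 23% open rate
print(sample_size_per_variant(0.20, 0.23))  # 2940
```

In this example you would need roughly 2,940 recipients per version, or about 5,900 in total before the winner goes out to anyone else, which is why small lists struggle to produce trustworthy results. Smaller expected lifts push the number up sharply, because the effect size is squared in the denominator.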

Working out sample sizes for multivariate tests is trickier. As a rule of thumb, though, using a larger percentage of your list in tests and running them for longer will generally deliver more reliable results.
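
One reason multivariate tests are harder is that every extra version adds more comparisons, and each comparison is another chance of crowning a ‘winner’ by luck. A simple (and deliberately cautious) way to allow for this is the Bonferroni correction, which divides your significance level by the number of comparisons. The sketch below uses hypothetical function names and illustrative rates to show how that pushes up the sample size each version needs; it is one textbook approach, not necessarily what your email tool does.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Normal-approximation sample size for comparing two rates (two-sided)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    return ceil((z_alpha + z_beta) ** 2 * variance
                / (expected_rate - baseline_rate) ** 2)


def multivariant_sample_size(num_versions, baseline_rate, expected_rate,
                             alpha=0.05, power=0.8):
    """Per-version and total sample sizes when every pair of versions is compared."""
    if num_versions < 2:
        raise ValueError("a split test needs at least two versions")
    comparisons = num_versions * (num_versions - 1) // 2
    adjusted_alpha = alpha / comparisons  # Bonferroni correction
    per_version = sample_size_per_variant(baseline_rate, expected_rate,
                                          adjusted_alpha, power)
    return per_version, per_version * num_versions


for versions in (2, 3, 4):
    print(versions, multivariant_sample_size(versions, 0.20, 0.23))
```

With two versions there's only one comparison, so the result matches the plain A/B calculation; with four versions there are six pairwise comparisons, so each version needs noticeably more recipients, on top of there being twice as many versions to fill.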

Good luck with your split testing, and if you have any queries about the practice at all, just leave them in the comments section below.
